In late 1936, a young Alan Mathison Turing published a groundbreaking treatise on mathematical logic titled "On Computable Numbers, with an Application to the Entscheidungsproblem." At first the work seemed relevant only to the small circle of mathematicians who could follow its forbidding title, but it would later be recognized as a cornerstone of computer science. Turing's exploration of computability and of the limits of mathematical logic had an impact on the field comparable to the seminal contributions of Boole and Shannon.

In his treatise, Turing presented a set of conceptual results based purely on logic and mathematics, making no mention of electronics or of how such machines might be built. These results nevertheless proved fundamental to the development of modern computers and continue to shape how we think about computation today.

In essence, the modern-day computer is a realization of "Turing's Universal Machine." It's challenging to conceptualize a computer that operates fundamentally differently from Turing's model.

The modern conception of a computer as a versatile, multipurpose device is largely due to the influence of Turing's Universal Machine. Today, it seems absurd to imagine separate, dedicated machines for word processing, accounting, chess, or other specific tasks. In the early days of computing, however, limited computational power made it difficult to envision a machine that could handle more than a narrow set of predefined operations. Turing's theoretical framework changed this perspective, demonstrating that a single machine, equipped with the right set of instructions, could simulate the functions of countless other machines. This paved the way for the general-purpose computers we rely on today, which can perform a wide range of tasks simply by changing their software.

Turing's Universal Machine is, at its core, a theoretical system made of three parts: a tape divided into cells that hold symbols, a head that reads and writes those symbols one cell at a time, and a finite table of rules that tells the machine, for each combination of its current state and the symbol under the head, what to write, which way to move, and which state to enter next. The system is loosely analogous to an abacus, where the beads represent symbols and the way they are moved corresponds to the rules. Just as the abacus can perform different calculations depending on how the beads are manipulated, the Universal Machine can exhibit different logical behaviors depending on the rules it follows.

The key insight of Turing's work was that a single Universal Machine, given a description of another machine's rule table written on its tape, could simulate that machine step by step. By extension, any formal system, characterized by a set of symbols and rules that can be applied mechanically, could be simulated by the Universal Machine. Arithmetic, for instance, is a formal system with its own symbols (numerals) and rules (for addition, subtraction, and so on). By encoding those symbols and rules appropriately, the Universal Machine can mimic the behavior of such mathematical systems.
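To make the tape, head, and rules concrete, here is a minimal sketch in JavaScript. The interpreter `runTuringMachine` and both rule tables are hypothetical examples written for illustration, not Turing's 1936 notation; in particular, the rule table is passed in as a separate argument rather than encoded on the tape as in Turing's actual universal machine. What it does show is the central idea: one fixed mechanism, fed different rules, performs different computations, here unary addition and binary increment.

```javascript
// Minimal Turing machine interpreter (illustrative sketch, hypothetical names).
// rules: object mapping "state,symbol" -> [symbolToWrite, move ("L" or "R"), nextState]
// The tape is unbounded in both directions; unwritten cells hold the blank "_".
function runTuringMachine(rules, input, startState, haltState, maxSteps = 10000) {
  const tape = new Map();
  [...input].forEach((symbol, i) => tape.set(i, symbol));
  let head = 0;
  let state = startState;

  for (let step = 0; state !== haltState; step++) {
    if (step >= maxSteps) throw new Error("step limit reached");
    const symbol = tape.get(head) ?? "_";
    const rule = rules[`${state},${symbol}`];
    if (!rule) throw new Error(`no rule for (${state}, ${symbol})`);
    const [write, move, next] = rule;
    tape.set(head, write);         // manipulate the symbol under the head
    head += move === "R" ? 1 : -1; // move one cell left or right
    state = next;                  // follow the rule to the next state
  }

  // Read the visited cells left to right and trim surrounding blanks.
  const cells = [...tape.keys()].sort((a, b) => a - b);
  return cells.map((i) => tape.get(i)).join("").replace(/^_+|_+$/g, "");
}

// Rule table 1 (hypothetical): unary addition, e.g. "111+11" -> "11111".
const unaryAddition = {
  "scan,1": ["1", "R", "scan"],
  "scan,+": ["1", "R", "toEnd"],  // turn "+" into a stroke...
  "toEnd,1": ["1", "R", "toEnd"],
  "toEnd,_": ["_", "L", "erase"],
  "erase,1": ["_", "L", "halt"],  // ...then erase one stroke at the end
};

// Rule table 2 (hypothetical): binary increment, e.g. "1011" -> "1100".
const binaryIncrement = {
  "right,0": ["0", "R", "right"],
  "right,1": ["1", "R", "right"],
  "right,_": ["_", "L", "carry"],
  "carry,1": ["0", "L", "carry"],
  "carry,0": ["1", "L", "halt"],
  "carry,_": ["1", "L", "halt"],
};

// Same interpreter, different rules, different behavior.
const sum = runTuringMachine(unaryAddition, "111+11", "scan", "halt");         // "11111"
const incremented = runTuringMachine(binaryIncrement, "1011", "right", "halt"); // "1100"
```

Adding a new behavior means writing a new rule table rather than building a new interpreter, which is the sense in which a general-purpose computer changes its function by changing its software.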

This concept extends beyond mathematical operations. A computer, in Turing's view, is not merely a collection of circuits but a manifestation of this universal machine paradigm. It is the embodiment of the idea that a single device, through the application of different instructions (rules), can replicate the functions of a vast array of other machines or systems. This revolutionary idea transformed the landscape of computer science, leading to the development of versatile, programmable machines capable of performing a wide range of tasks. Turing's Universal Machine thus emerged as a pivotal concept, demonstrating the potential of a single machine to simulate any formal system by simply changing its instructions.

The concept of a "program" that can modify the behavior of a machine is commonplace today. However, this idea was once revolutionary, requiring a significant shift in thinking. It was akin to envisioning a universal machine capable of mimicking the functions of various devices, such as a food mixer, VCR, motorcycle, or hairdryer, simply by altering its operational rules. This shift in perspective was essential to the development of modern computing, as it allowed us to move beyond specialized machines with fixed functions and towards the flexible, general-purpose computers we use today. The ability to reprogram a machine to perform different tasks opened up a world of possibilities, transforming computers into versatile tools that could be adapted to countless applications.

Charles Babbage's concept of an "Analytical Engine," though largely forgotten after his death, was remarkably similar to Turing's Universal Machine. Babbage, constrained by the technological limitations of his era, described his vision of a universally programmable calculator using the language of mechanics. A century later, Turing, unburdened by these constraints and a decade before the advent of transistors, framed his ideas in the language of logic and mathematics. This shift in perspective allowed Turing to transcend the mechanical limitations that had confined Babbage, paving the way for a more abstract and powerful conceptualization of computation.

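```javascript
// The "language" in which a universal calculating machine was described.
// Cutoff: 1936, the year of Turing's "On Computable Numbers".
function descriptionLanguage(year) {
  if (year < 1936) {
    return "mechanics"; // Babbage's era (Analytical Engine, 1830s)
  } else {
    return "logic and mathematics"; // Turing's era
  }
}
```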

Turing laid the logical foundation for his "universal symbol handler," leaving subsequent generations of engineers to work out the practical details. Building on the work of Boole and Shannon, these engineers settled on the binary digits "1" and "0" (the same two "figures" Turing's own machines printed) and eventually used transistors to represent them electrically, through the presence or absence of current. This line of development ultimately led to the microprocessor, the physical embodiment of Turing's "symbol handler."
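Shannon's insight was that switching circuits can carry out Boole's algebra, and once symbols are reduced to 1 and 0, arithmetic itself can be built from a handful of logical operations. The sketch below is a standard textbook ripple-carry adder, with JavaScript's bitwise operators standing in for logic gates; the function names are hypothetical, and it models the logic only, not any real circuit or transistor behavior.

```javascript
// Boolean "gates" on the symbols 0 and 1 (logic only, not a hardware model).
const AND = (a, b) => a & b;
const OR = (a, b) => a | b;
const XOR = (a, b) => a ^ b;

// Full adder: adds two bits plus an incoming carry using only the gates above.
function fullAdder(a, b, carryIn) {
  const partial = XOR(a, b);
  const sum = XOR(partial, carryIn);
  const carryOut = OR(AND(a, b), AND(partial, carryIn));
  return [sum, carryOut];
}

// Ripple-carry adder over equal-length bit arrays, most significant bit first.
function addBinary(x, y) {
  if (x.length !== y.length) throw new Error("operands must have equal length");
  const result = new Array(x.length);
  let carry = 0;
  for (let i = x.length - 1; i >= 0; i--) {
    const [s, c] = fullAdder(x[i], y[i], carry);
    result[i] = s;
    carry = c;
  }
  return carry ? [carry, ...result] : result;
}

// 0110 (6) + 0111 (7) = 1101 (13)
const thirteen = addBinary([0, 1, 1, 0], [0, 1, 1, 1]);
```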

Turing's ideas had caught the attention of the British government even before the war. From 1938 he worked part-time for the Government Code and Cypher School (GC&CS), and on 4 September 1939, the day after Britain declared war on Germany, he reported to its wartime headquarters at Bletchley Park, a Victorian estate midway between the universities of Oxford and Cambridge whose seclusion provided a secure environment for the work. There the 27-year-old Turing joined a team of brilliant mathematicians and scientists, remembered today simply as the Codebreakers. Their principal target was Enigma, the formidable cipher machine the German military used to secure its communications during World War II; the intelligence eventually derived from Enigma and other high-level German ciphers was given the codename Ultra.

The Codebreakers did not start from nothing. Polish cryptanalysts had reconstructed the Enigma's internal wiring in the early 1930s, and in July 1939 they handed their methods and replica machines to British and French intelligence. Possessing a machine, however, was only the first step. Enigma operators changed the machine's settings every day, and the number of possible settings was astronomical, far too large for brute-force decryption to be practical. This presented a major obstacle for the British, as breaking Enigma was crucial to gaining an advantage in the war.
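The "astronomical number" of Enigma settings can be made concrete with a short calculation. This sketch assumes the common wartime Army configuration (three rotors chosen in order from a set of five, a starting position for each rotor, and ten plugboard cables) and, like the frequently quoted figure, ignores ring settings; the function name `enigmaKeySpace` is hypothetical. JavaScript's `BigInt` keeps the arithmetic exact.

```javascript
// Exact factorial using BigInt (argument must be a BigInt).
function factorial(n) {
  let result = 1n;
  for (let i = 2n; i <= n; i++) result *= i;
  return result;
}

// Enigma key-space estimate (illustrative; all arguments are BigInts).
// Ring settings are ignored, as in the commonly quoted figure.
function enigmaKeySpace(rotorsAvailable = 5n, rotorsUsed = 3n, cables = 10n) {
  // Ordered choice of rotors: 5 * 4 * 3 = 60
  const rotorOrders = factorial(rotorsAvailable) / factorial(rotorsAvailable - rotorsUsed);
  // Starting position of each rotor: 26^3 = 17,576
  const positions = 26n ** rotorsUsed;
  // Plugboard: pair up 2k of the 26 letters, leave the rest unplugged:
  // 26! / ((26 - 2k)! * k! * 2^k)
  const plugboard =
    factorial(26n) /
    (factorial(26n - 2n * cables) * factorial(cables) * 2n ** cables);
  return { rotorOrders, positions, plugboard, total: rotorOrders * positions * plugboard };
}

const space = enigmaKeySpace();
// space.total === 158962555217826360000n (about 1.6 × 10^20)
```

At roughly 1.6 × 10^20 settings, testing them one by one was hopeless; the codebreakers instead exploited structural weaknesses and likely fragments of plaintext to rule out settings wholesale.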

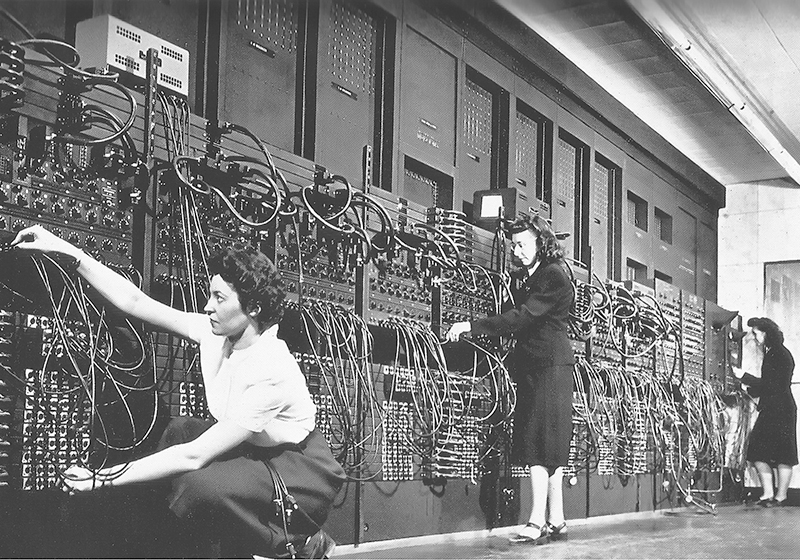

To meet another challenge posed by German cryptography, the Lorenz cipher that the German High Command used for its teleprinter traffic, Bletchley Park acquired Colossus, a groundbreaking electronic machine designed by the Post Office engineer Tommy Flowers and built in 1943. Colossus was not a realization of Turing's Universal Machine: it was set up for its cryptanalytic task with switches and plug panels rather than by a stored program. Even so, it significantly advanced the field of computation. This massive machine occupied an entire room, was constructed largely from telephone and telegraph components, and used what were then cutting-edge vacuum tubes to perform complex cryptanalysis. With a photoelectric reader, Colossus could scan teleprinter tape at 5,000 characters per second, providing invaluable assistance to the Codebreakers in their efforts to decipher German communications.
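Colossus attacked the Lorenz teleprinter cipher, which combined each 5-bit teleprinter character of a message with a character of machine-generated key by bitwise addition modulo 2 (XOR), in the style of a Vernam cipher. The sketch below shows only that combining step, using made-up 5-bit values rather than real ITA2 codes or Lorenz wheel patterns, and a hypothetical helper named `combine`. It also shows why reusing a key was dangerous: XORing two ciphertexts sent with the same key cancels the key completely.

```javascript
// Vernam-style combination of 5-bit teleprinter characters (illustrative).
// Each character is an integer 0..31; encryption and decryption are the same
// bitwise XOR with the key stream.
function combine(chars, key) {
  if (chars.length !== key.length) throw new Error("key must match message length");
  return chars.map((c, i) => (c ^ key[i]) & 0b11111);
}

// Hypothetical 5-bit values, not real ITA2 codes or Lorenz wheel output.
const plaintext = [0b10110, 0b00011, 0b11100];
const key = [0b01100, 0b10101, 0b00111];

const ciphertext = combine(plaintext, key); // [0b11010, 0b10110, 0b11011]
const recovered = combine(ciphertext, key); // equals plaintext

// Two messages sent with the same key ("in depth"): XORing the ciphertexts
// cancels the key, leaving plaintext XOR otherPlaintext.
const otherPlaintext = [0b00001, 0b01000, 0b10010];
const otherCiphertext = combine(otherPlaintext, key);
const keyCancelled = combine(ciphertext, otherCiphertext);
```

Colossus did not decrypt messages this way directly; it counted how often particular patterns appeared when candidate key settings were combined with the intercepted tape, which is why reading tape at high speed mattered.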

From early 1944 until the end of the war, Colossus played a pivotal role in deciphering high-level German teleprinter messages, giving the Allies crucial insight into enemy strategy and movements and contributing significantly to the outcome of the war. Enigma, for its part, was attacked with the Bombe, an electromechanical device designed by Turing and improved by Gordon Welchman. Despite the Enigma machine's perceived invincibility, the tireless efforts of Alan Turing and his fellow codebreakers, driven by a passion for mathematics and logic, broke its code.

However, the critical contributions of these brilliant minds remained shrouded in secrecy for decades. Even today, while the D-Day invasion is widely remembered, the names of the scientists whose codebreaking helped make it possible are largely unknown. The secrecy surrounding Ultra prevented them from receiving public recognition for their vital role in the Allied victory. The very existence of Colossus and its groundbreaking decryption methods remained classified until the 1970s and 1990s, respectively. Today, a working reconstruction of Colossus stands at Bletchley Park as a testament to this remarkable feat of ingenuity and perseverance.

After the war, Turing continued his research and published several influential works that are now recognized as pioneering contributions to computer programming. The best known of these is his 1950 paper "Computing Machinery and Intelligence." Largely free of mathematical formulae and written in accessible language, it presents a series of observations that have had a profound impact on cultural, intellectual, and scientific discourse. Its opening line, "I propose to consider the question, 'Can machines think?'", is both direct and thought-provoking. Relying on argument rather than formal proof, Turing introduced the "Imitation Game" (later known as the Turing Test) as a way to explore the question of machine intelligence. The test became a cornerstone of the philosophy of artificial intelligence and continues to spark debate today.

The "Imitation Game," as conceived by Turing, involves a human interrogator interacting with two entities concealed from view: one human and one machine, known to the interrogator only by the labels X and Y. The interrogator communicates with both through a text-only channel (Turing suggested a teleprinter; today we would picture a keyboard and screen). By posing a series of questions and analyzing the responses, the interrogator must determine which entity is the human and which is the machine.

The core idea behind the game is that if a machine can consistently produce responses that are indistinguishable from those of a human, then it can be considered to possess a form of intelligence. This ability to simulate human-like conversation, even if it's just an illusion, is the benchmark by which Turing proposed to measure the "intelligence" of a machine.

In his seminal paper, Turing made a bold prediction: in about fifty years' time, computers would play the Imitation Game so well that an average interrogator would have no more than a 70% chance of correctly identifying the machine after five minutes of questioning. Turing considered the original question of whether machines can truly "think" too meaningless to deserve discussion. Nevertheless, he anticipated that by the end of the 20th century, language and general educated opinion would have changed so much that one could speak of machines thinking without expecting to be contradicted.
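Turing's prediction turns the Imitation Game into a measurable benchmark. The sketch below assumes a hypothetical record for each five-minute session, noting which hidden label ("X" or "Y", the labels Turing used) was really the machine and which label the interrogator named; averaging over many sessions stands in for Turing's "average interrogator". The function names and the session data are made up for illustration.

```javascript
// Fraction of sessions in which the interrogator correctly named the machine.
function correctIdentificationRate(sessions) {
  if (sessions.length === 0) throw new Error("no sessions");
  const correct = sessions.filter((s) => s.guessedMachine === s.actualMachine).length;
  return correct / sessions.length;
}

// Turing's 1950 benchmark: interrogators right no more than 70% of the time
// after five minutes of questioning.
function meetsTuringsPrediction(sessions, threshold = 0.7) {
  return correctIdentificationRate(sessions) <= threshold;
}

// Hypothetical results from ten sessions (made-up data).
const actual = ["X", "Y", "Y", "X", "X", "Y", "X", "Y", "Y", "X"];
const guessed = ["X", "X", "Y", "X", "X", "X", "X", "Y", "X", "Y"];
const sessions = actual.map((a, i) => ({ actualMachine: a, guessedMachine: guessed[i] }));

const rate = correctIdentificationRate(sessions); // 0.6
const passes = meetsTuringsPrediction(sessions);  // true
```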

Over half a century after Alan Turing published "Computing Machinery and Intelligence," his insights on artificial intelligence continue to resonate. We often find ourselves humbled by computers' ability to "think" strategically, as evidenced by our struggles against chess-playing algorithms. As technology advances, we will likely encounter more instances where machines exhibit cognitive abilities that challenge our perceived superiority. These experiences may gradually validate Turing's assertions about the potential of machines to mimic human thought processes.

Alan Turing's brilliant life was tragically cut short on June 7, 1954, when he was just 41 years old. Turing, like many other great artists and scientists, was homosexual. In the early 1950s, a wave of homophobia swept through England, fueled by Cold War paranoia and by the 1951 defection to the Soviet Union of Guy Burgess and Donald Maclean, Foreign Office officials who had been spying for Moscow; Burgess's homosexuality fed suspicion that gay men were security risks. The resulting witch-hunt did not spare even a genius who had served his country with distinction.

In 1952 Turing was arrested, tried, and convicted of "gross indecency." As a condition of his probation, he was subjected to a humiliating and debilitating chemical castration therapy, a punishment inflicted by the very institutions he had served during World War II. The irony was compounded by the fact that Turing's wartime contributions remained classified and could not be used in his defense. He spent his final years haunted by the injustice he had endured, and in 1954 his death was ruled a suicide.

While we will never know the full extent of Turing's suffering, his legacy lives on in the countless "Universal Machines" that populate our world today. These machines, ever more sophisticated and human-like in their capabilities, testify to his visionary genius. One can hope that, wherever he may be, Alan Turing finds solace in knowing that his ideas shaped the world of computing, and that his story has contributed to a growing acceptance and understanding of human diversity.