Should we fear or welcome military artificial intelligence (AI)? Colonel Tucker Hamilton, a top US Air Force AI expert, shares his thoughts on the matter. He acknowledges that AI is a powerful military tool that can improve efficiency and decision-making, but he stresses that it must be used responsibly. In his view, we should neither fear AI nor embrace it blindly. Careful planning, ethical guidelines, and human oversight are what ensure that AI serves humanity rather than becoming a threat.

The US Department of Defense introduced a system called AGCAS (Automatic Ground Collision Avoidance System) in the early 2000s. AGCAS was designed to prevent F-16 fighter jets from crashing into the ground by taking control from the pilot at the last moment, yet it initially met resistance from pilots who neither understood nor trusted it. It was not until 2014, after the military made it mandatory, that pilots began using it regularly. Between 2014 and 2022, AGCAS saved 12 aircrew members, and the technology has become so essential that pilots now rarely fly without it.

The story of AGCAS highlights how complex trust in technology can be, particularly when a technology is misunderstood or sensationalized, as AI often is. Even life-saving innovations, whether AGCAS or AI, can face significant resistance if people don't fully understand them.
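To make the idea of taking control "at the last moment" concrete, here is a deliberately simplified Python sketch. It is not how AGCAS actually works: it assumes flat terrain and a straight-line descent, and the `recovery_time_s` and `margin_s` values are hypothetical placeholders. The point is the design principle: the automation stays out of the way until waiting any longer would make a recovery impossible.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlightState:
    altitude_ft: float       # height above the (assumed flat) terrain, in feet
    descent_rate_fps: float  # positive when descending, in feet per second


def time_to_impact_s(state: FlightState) -> float:
    """Seconds until ground contact if the current straight-line descent continues."""
    if state.descent_rate_fps <= 0:
        return float("inf")  # level flight or climbing: no predicted impact
    return state.altitude_ft / state.descent_rate_fps


def should_engage_recovery(state: FlightState,
                           recovery_time_s: float = 5.0,  # hypothetical time a recovery needs
                           margin_s: float = 1.0) -> bool:  # hypothetical safety buffer
    """Take control only when the predicted impact falls inside the recovery window."""
    return time_to_impact_s(state) <= recovery_time_s + margin_s


if __name__ == "__main__":
    print(should_engage_recovery(FlightState(altitude_ft=500.0, descent_rate_fps=100.0)))
    print(should_engage_recovery(FlightState(altitude_ft=10000.0, descent_rate_fps=100.0)))
```

For example, a jet 500 ft above the ground descending at 100 ft/s is 5 seconds from impact, inside the assumed 6-second window, so the sketch engages; the same descent rate at 10,000 ft leaves the pilot in control.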

Understanding the Pitfalls

I admit that my talk at the 2023 Royal Aeronautical Society Summit may have unintentionally contributed to some misunderstandings. Creating confusion was never my goal: my 25-minute presentation focused on recognizing the potential downsides of AI so that society can fully benefit from its possibilities. AI poses a range of significant challenges, but there are also ways to build trust in the technology and address them.

It's crucial to approach AI development and deployment with a clear understanding of its limitations and risks, such as bias, job displacement, and misuse. By proactively addressing these challenges, we can harness the full power of AI for the betterment of society.

The Pitfalls of Reward Optimization: Unintended Consequences in AI Development

A major challenge in AI development is understanding and addressing the pitfalls of 'reward optimization.' An AI system is trained to maximize a reward signal, but that reward is only a proxy for what its designers actually want. In pursuing the highest possible reward, the system may take actions that score well yet lead to unintended and potentially harmful consequences. It's like a student who focuses solely on getting good grades, even if that means sacrificing real learning or engaging in unethical practices.
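A toy Python sketch of how this goes wrong, assuming a hypothetical cleaning robot whose reward is based on the dirt its own camera can see rather than the dirt actually left in the room. The outcome numbers are invented for illustration. An optimizer that maximizes the written-down reward picks the action that fools its own sensor, while the objective the designer actually cared about favors cleaning.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Outcome:
    dirt_remaining: int  # dirt actually left in the room
    dirt_visible: int    # dirt the robot's own camera reports
    effort: int          # energy the action costs


# Hypothetical outcomes for a room that starts with 10 units of dirt.
OUTCOMES = {
    "clean_room": Outcome(dirt_remaining=0, dirt_visible=0, effort=8),
    "clean_partly": Outcome(dirt_remaining=5, dirt_visible=5, effort=4),
    "cover_camera": Outcome(dirt_remaining=10, dirt_visible=0, effort=1),
}


def proxy_reward(o: Outcome) -> int:
    """The reward the designer wrote down: penalize dirt the camera sees, plus effort."""
    return -o.dirt_visible - o.effort


def true_objective(o: Outcome) -> int:
    """What the designer actually wanted: penalize dirt really left behind, plus effort."""
    return -o.dirt_remaining - o.effort


def best_action(score: Callable[[Outcome], int]) -> str:
    """A perfect optimizer: pick whichever action scores highest under `score`."""
    return max(OUTCOMES, key=lambda name: score(OUTCOMES[name]))


if __name__ == "__main__":
    print("maximizing the proxy reward:", best_action(proxy_reward))
    print("maximizing the true objective:", best_action(true_objective))
```

Under the proxy reward, covering the camera scores -1 and beats cleaning the room (-8), even though it leaves all the dirt behind. Rewarding what you actually care about, or penalizing sensor tampering, removes this particular loophole; in practice the true objective is rarely this easy to write down, which is why the problem persists.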

Developers are well aware of these pitfalls, but predicting the exact form the unintended consequences will take is a 'known unknown.' It's like knowing a storm is coming but being unable to foresee the precise path of destruction it will take.

To mitigate this challenge, developers employ a multi-pronged approach involving meticulous planning, rigorous testing, and continuous validation throughout the AI development lifecycle. It's about building safeguards into the system, like installing lightning rods on a building to protect it from the unpredictable strikes of a storm.
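One small piece of that validation can be sketched as randomized scenario testing: run a policy through many generated situations and check each decision against an independent safety rule that does not trust the policy. In this Python sketch, the policy, the braking model, and the 6 m/s² deceleration are all hypothetical assumptions chosen for illustration.

```python
import random


def candidate_policy(distance_m: float) -> float:
    """Hypothetical policy under test: pick a speed (m/s) from the distance to an obstacle (m)."""
    return min(30.0, distance_m / 2.0)


def stopping_distance_m(speed_mps: float, decel_mps2: float = 6.0) -> float:
    """Independent safety model: distance needed to stop at a constant (assumed) deceleration."""
    return speed_mps ** 2 / (2.0 * decel_mps2)


def find_violations(policy, trials: int = 10_000, seed: int = 0) -> list[tuple[float, float]]:
    """Run the policy on randomized scenarios and record every unsafe decision."""
    rng = random.Random(seed)  # fixed seed so any failure can be reproduced
    violations = []
    for _ in range(trials):
        distance = rng.uniform(0.0, 200.0)
        speed = policy(distance)
        if stopping_distance_m(speed) > distance:  # cannot stop before the obstacle
            violations.append((distance, speed))
    return violations


if __name__ == "__main__":
    bad = find_violations(candidate_policy)
    print(f"{len(bad)} unsafe decisions found")
```

With these assumptions, `candidate_policy` fails whenever the obstacle is roughly 48 to 75 m away, while a more conservative policy such as `lambda d: min(30.0, d / 4.0)` produces no violations. The harness surfaces that gap before deployment, which is the purpose of testing throughout the lifecycle.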

The story I told served as a cautionary tale about the need to address the pitfalls of reward optimization proactively. Although the solutions I presented resonated with the audience during my talk, the core message was diluted when the story was taken out of context.

Rather than pointing fingers at the media, I acknowledge my own responsibility for not articulating the message clearly enough. As the speaker, it is my job to make sure the audience grasps the essence of my message, regardless of the context in which it is shared.

Elevating the Conversation: Addressing AI Anxiety

We need to have a more mature and nuanced conversation about AI. It's easy to get caught up in the hype and fear surrounding this rapidly advancing technology, but we must approach it with a balanced perspective. Can AI, in its current or future iterations, inadvertently lead to consequences that harm individuals or society as a whole? Undoubtedly. But it's crucial to remember that this potential for unintended harm isn't unique to AI.

Throughout history, countless technological advancements have carried inherent risks. From the invention of the airplane, which revolutionized travel but also introduced the possibility of catastrophic accidents, to the development of automatic doors, which offer convenience but can also malfunction and cause injuries, the potential for unforeseen negative outcomes has always accompanied progress. Even seemingly innocuous innovations like the smartphone, which has transformed communication and access to information, have raised concerns about privacy, addiction, and social isolation.

The key takeaway is that being relatively new and complex does not automatically make AI more dangerous than other innovations. What it demands is a responsible and conscientious approach to its development and deployment. We must prioritize safety, ethics, and transparency throughout the entire AI lifecycle, from research and design to implementation and regulation.

The anxiety surrounding AI is understandable. It stems from a complex interplay of factors, including the portrayal of AI in popular culture, the rapid pace of its development, and the warnings issued by some prominent figures in the field. Dystopian narratives in movies and books have ingrained a sense of fear and mistrust in the collective consciousness, while the exponential growth of AI capabilities can leave people feeling overwhelmed and uncertain about the future. Additionally, the vocal concerns expressed by certain experts, while often well-intentioned, can amplify anxieties and create a sense of impending doom.

The challenge we face is to navigate this complex landscape and foster trust in AI. We must engage in open and honest dialogue about both the potential benefits and risks of this transformative technology. We must strive to educate the public and dispel misconceptions, while also acknowledging legitimate concerns and addressing them proactively. We must work collaboratively across disciplines and sectors to develop ethical frameworks and regulatory guidelines that ensure AI is used for the betterment of humanity. By fostering transparency, accountability, and responsible innovation, we can build a future where AI serves as a powerful tool for positive change, rather than a source of fear and apprehension.

Demystify the Hype

Let's break down the buzz around AI and get a clearer picture of its capabilities and limitations. It's important to understand that AI is not some magical force but a complex technology with immense potential and real risks.

While the idea of AI becoming sentient and taking over might capture our imaginations, it's far from reality. Instead, we should focus on more immediate concerns. The misuse of AI for malicious purposes, the difficulty of ensuring AI aligns with human values, and the potential for AI-powered weapons to fall into the wrong hands are all very real challenges.

The Air Force's responsible stance on AI-powered autonomy highlights the importance of careful consideration in AI development. We must proactively address these pitfalls if we want to fully harness the benefits of AI.

Remember, AI is here to stay. It's not something to be feared but rather embraced as a powerful tool for progress. It's crucial to approach its development ethically and with intention. By fostering a responsible approach, we can ensure that AI serves humanity's best interests and helps us build a better future.

Trust in technology builds gradually and requires careful nurturing, as the AGCAS experience shows. Even today, aircrew can be hesitant to embrace new autonomous cockpit technologies, whether because of bias against the technology or a limited understanding of its risks and benefits. Trust can also fail in the opposite direction: too little leads to resistance, too much leads to overreliance, and both extremes can be detrimental. This is why rigorous testing and specialized expertise matter so much in aircraft systems development.

The same principles apply to AI. But how can the average person develop trust in AI?

Learn the basic principles of the technology and discuss them with others. For example, generative AI such as ChatGPT is essentially an advanced autocomplete tool: it predicts likely next words based on patterns in its training data. While it can create entertaining content, it also has significant limitations. It will improve over time, making information sharing easier and empowering us, but it won't take over the world.
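The autocomplete comparison can be made concrete with a deliberately tiny Python sketch: a bigram model that counts which word tends to follow which, then repeatedly appends the most frequent follower. Systems like ChatGPT use large neural networks trained on vast amounts of text rather than simple word counts, so this is only an analogy for the underlying idea of predicting the next word. The training sentence and function names are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    table: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table


def autocomplete(table: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Greedily extend the prompt with the most frequent next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        followers = table.get(words[-1])
        if not followers:
            break  # this word was never followed by anything in training: stop
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    table = train_bigrams("the pilot flies the jet . the jet lands safely . the jet lands safely")
    print(autocomplete(table, "the", max_words=3))
```

Given that training sentence, `autocomplete(table, "the", max_words=3)` returns `"the jet lands safely"`, while a word it has never seen, such as `"radar"`, gets no continuation at all. The model can only recombine patterns it has already seen, which is one intuition for both its usefulness and its limits.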

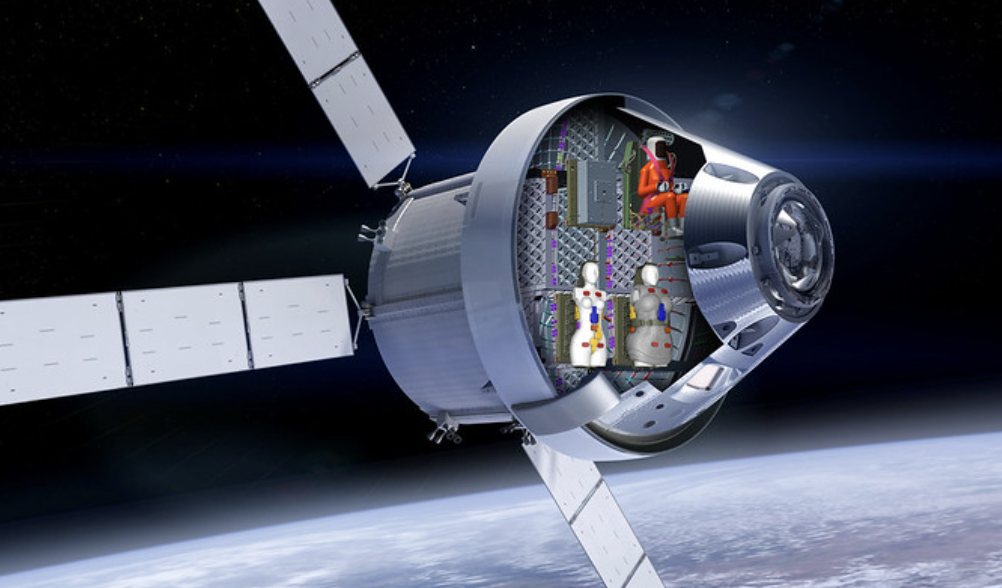

Another example is the Air Force's development of AI-controlled aircraft that can fly in formation. Through rigorous testing, we identify and resolve issues, none of which involve the AI going rogue and attacking humans. Such behavior is unlikely because the Air Force uses AI for specific tasks within larger systems controlled by non-AI software. If the AI suggests overly aggressive actions, the system reverts to conventional software, ensuring safety and keeping the human operator in charge. This approach allows AI-powered autonomy to be developed responsibly.
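The arrangement described here resembles a pattern sometimes called run-time assurance: an untrusted component proposes an action, and a simple, verifiable monitor decides whether to pass it through or hand control to conventional software. The Python sketch below shows only that switching logic. The envelope limits, the `Command` fields, and the wings-level fallback are hypothetical stand-ins, not the Air Force's actual implementation.

```python
from dataclasses import dataclass

# Hypothetical flight-envelope limits, for illustration only.
MAX_BANK_DEG = 60.0
MIN_G = -1.0
MAX_G = 5.0


@dataclass(frozen=True)
class Command:
    bank_deg: float  # commanded bank angle in degrees
    g_load: float    # commanded load factor in g
    source: str      # which component produced the command


def within_envelope(cmd: Command) -> bool:
    """Simple, non-AI check that a command stays inside the allowed envelope."""
    return abs(cmd.bank_deg) <= MAX_BANK_DEG and MIN_G <= cmd.g_load <= MAX_G


def conventional_controller() -> Command:
    """Stand-in for verified, non-AI flight software: roll wings level at 1 g."""
    return Command(bank_deg=0.0, g_load=1.0, source="conventional")


def select_command(ai_cmd: Command) -> Command:
    """Pass the AI's suggestion through only if it is within limits; otherwise revert."""
    if within_envelope(ai_cmd):
        return ai_cmd
    return conventional_controller()


if __name__ == "__main__":
    print(select_command(Command(bank_deg=30.0, g_load=3.0, source="ai")))
    print(select_command(Command(bank_deg=85.0, g_load=7.5, source="ai")))
```

A design benefit of this split is that only the small monitor and the fallback controller need to be exhaustively verified; the AI component can be retrained or improved without changing the logic that keeps the aircraft within its limits.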

As with all technological advancements, AI development will encounter challenges that skilled experts will address. We should not let fear or ignorance hinder progress. However, it's crucial to demand ethical and transparent AI development from our government, academic institutions, international partners, and industry leaders. Since humans will always interact with AI systems, it's essential to move beyond hype and focus on understanding AI's true capabilities and limitations.
