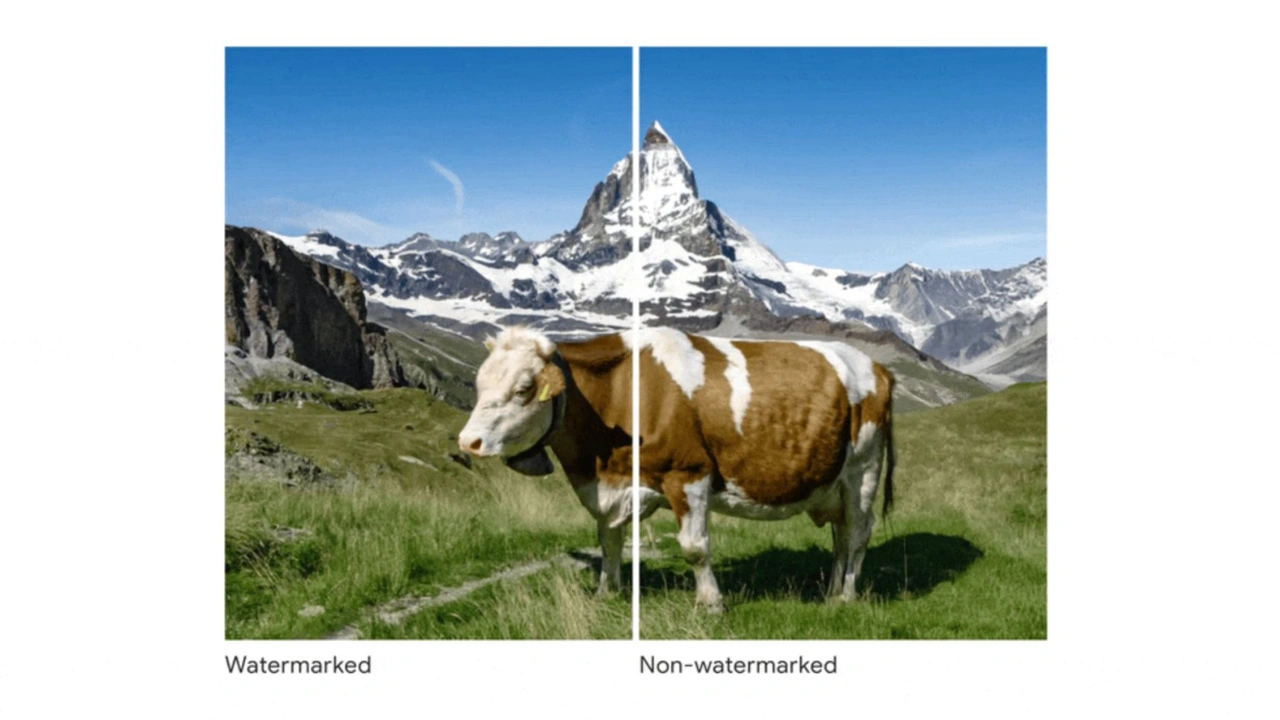

Google Introduces Invisible Watermark for AI-Generated Text

Google has recently rolled out a new tool to address growing concerns about AI-generated content: an invisible watermark designed specifically for text generated by artificial intelligence. The move is part of Google’s broader effort to maintain content integrity, distinguish AI-generated text from human-written material, and mitigate misuse as AI increasingly shapes online communication. Below, we break down how this watermark works, its potential implications, and how it could shape the future of AI content across industries.

What is an Invisible Watermark?

An invisible watermark is a subtle marker embedded within content that is not visible to the human eye but can be detected by specialized software. It works somewhat like a digital signature: it allows AI-generated text to be identified without noticeably changing its meaning or readability. Digital watermarks have been used for years in images and videos. With the proliferation of AI in content creation, the technique has now been adapted for text as well.

How Does It Work?

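The invisible watermark for AI-generated text works by encoding a statistical pattern into the text’s word choices while the text is being generated. A language model writes one token (a word or word fragment) at a time, usually choosing among many plausible candidates. The watermarking system subtly adjusts the probabilities of those candidates according to a secret pattern, so the finished text favors certain choices slightly more often than chance would predict. No single word looks out of place, but across a passage these choices leave a traceable pattern that an algorithm with access to the secret can detect.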
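
To make the mechanism concrete, here is a toy Python sketch of one well-known academic style of text watermarking, a "green list" scheme in which a secret key and the previous token decide which candidates receive a small probability boost. It is not Google’s actual algorithm, which this article does not describe in detail; the vocabulary, the random scores standing in for a language model, `GAMMA`, `DELTA`, and `SECRET_KEY` are all hypothetical.

```python
import hashlib
import math
import random

# Hypothetical toy setup: a real model has a vocabulary of tens of thousands of tokens.
VOCAB = ["the", "a", "cat", "dog", "sat", "ran", "on", "mat",
         "rug", "quickly", "slowly", "and", "then", "home", "away", "today"]
GAMMA = 0.5                        # assumed share of candidates marked "green"
DELTA = 2.0                        # assumed score bonus given to green candidates
SECRET_KEY = b"hypothetical-key"   # illustrative; the detector would hold the same key


def is_green(prev_token: str, token: str, key: bytes = SECRET_KEY) -> bool:
    """Pseudorandomly mark roughly GAMMA of tokens green, keyed on the secret and the previous token."""
    digest = hashlib.sha256(key + prev_token.encode() + b"|" + token.encode()).digest()
    return digest[0] < 256 * GAMMA


def sample_next(prev_token: str, scores: dict, rng: random.Random, watermark: bool) -> str:
    """Softmax-sample the next token, boosting green candidates when watermarking is on."""
    adjusted = {
        tok: s + (DELTA if watermark and is_green(prev_token, tok) else 0.0)
        for tok, s in scores.items()
    }
    top = max(adjusted.values())
    weights = [math.exp(s - top) for s in adjusted.values()]  # numerically stable softmax weights
    return rng.choices(list(adjusted), weights=weights, k=1)[0]


def generate(n_tokens: int, seed: int = 0, watermark: bool = True) -> list:
    rng = random.Random(seed)
    tokens = ["<s>"]  # start marker acts as the "previous token" for the first word
    for _ in range(n_tokens):
        # Random scores stand in for a real language model's next-token scores.
        scores = {tok: rng.gauss(0.0, 1.0) for tok in VOCAB}
        tokens.append(sample_next(tokens[-1], scores, rng, watermark))
    return tokens[1:]


def green_fraction(tokens: list) -> float:
    """Share of tokens that landed on the green list for their preceding token."""
    prevs = ["<s>"] + tokens[:-1]
    return sum(is_green(p, t) for p, t in zip(prevs, tokens)) / len(tokens)


watermarked = generate(400, watermark=True)
plain = generate(400, watermark=False)
print(f"green fraction, watermarked: {green_fraction(watermarked):.2f}")
print(f"green fraction, plain:       {green_fraction(plain):.2f}")
```

The watermarked run should show a green fraction well above `GAMMA`, while the plain run should stay roughly near it. No individual word is unusual, which is why the pattern is invisible to readers.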

Because the pattern is embedded during the generation process itself, it does not disrupt the flow or meaning of the content and remains imperceptible to the average reader. Detection works in the opposite direction: a detector, which may be a statistical test or a trained model, scores a passage and checks whether the pattern appears far more often than it would in text written without the watermark.
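
Under a green-list style scheme (assumed here for illustration, where a secret key marks a share $\gamma$ of candidate words "green" and the generator favors them), detection reduces to a simple hypothesis test. Let $T$ be the number of scored tokens and $|s|_G$ the number that fall on the green list. Text written without the key should hit the green list about $\gamma T$ times, so a detector can compute a z-score and flag the text only when it is far above zero; the threshold is a design choice, not a published Google value.

```latex
z = \frac{|s|_G - \gamma T}{\sqrt{T \, \gamma \, (1 - \gamma)}}

% Worked example with T = 200, \gamma = 0.5, |s|_G = 140:
z = \frac{140 - 0.5 \cdot 200}{\sqrt{200 \cdot 0.5 \cdot 0.5}} = \frac{40}{\sqrt{50}} \approx 5.66
```

A z-score that large is very unlikely by chance. For a fixed rate of green hits, $z$ grows with $\sqrt{T}$, which is one reason short snippets are harder to classify than long passages.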

The Importance of Invisible Watermarks for AI-Generated Text

With rapid advances in AI, the line between human- and machine-created content is becoming increasingly blurred. This creates challenges across many sectors, from education to journalism and even the legal system. Invisible watermarks are a significant step toward addressing these challenges.

Combatting Misinformation

One of the primary concerns related to AI-generated content is the spread of misinformation. AI systems like OpenAI's GPT and Google's own AI models can produce text that closely mimics human writing, making it difficult for readers to discern the origin of the content. By embedding an invisible watermark, Google aims to provide a way for platforms, educators, and content creators to differentiate between AI-generated and human-written text.

In the fight against misinformation, the ability to trace the source of content is invaluable. If the watermark is detected, it indicates that an article or post was produced by a model that applies the watermark. Its absence, however, does not prove that a human wrote the text, because content from other AI systems may carry no watermark at all. Detection can also help track the spread of misleading or malicious narratives that were artificially generated.

Enhancing Transparency in AI

AI systems are often black boxes, meaning the processes behind their outputs can be opaque. The watermark does not open that box, but it does make the origin of AI-generated content more transparent. Being able to distinguish AI-written text allows for greater accountability in how AI systems are used, particularly in media, academia, and the content creation industries.

The watermark provides a means to track and verify content, encouraging responsible use of AI where factual accuracy and authorship are critical. It can also help institutions such as universities and news outlets maintain editorial standards and discourage the use of AI to generate misleading or plagiarized content.

Potential Implications of Invisible Watermarks

The introduction of invisible watermarks for AI-generated text could have far-reaching implications for various industries. Below are some key areas where this technology could have a significant impact:

Impact on Content Creation and Journalism

Content creators, especially journalists, are concerned about the authenticity of published content. As AI takes on more writing tasks, there is a growing risk that it will be used to produce articles or stories that appear to be written by humans. If AI’s involvement is not disclosed, this could undermine the credibility of the content.

Invisible watermarks can help solve this problem by ensuring that AI-generated content is clearly identified, which could lead to more ethical content production practices. Journalistic integrity, particularly in fields that require fact-checking and transparency, can be better upheld when the origin of the text is clear.

Impact on Education

In the educational sector, AI is increasingly being used to assist students in writing essays, reports, and other assignments. However, the use of AI in academic settings raises concerns about plagiarism and the authenticity of students' work.

With an invisible watermark, educational institutions could detect when AI has been used to generate an assignment. This would give educators a tool to assess whether a student genuinely contributed to their work or relied too heavily on AI tools. It could complement traditional plagiarism detection, although it can only flag text from models that apply the watermark, and a positive result is a statistical signal rather than proof.
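
The trade-off for educators can be made concrete with a short calculation. Assume a detector that reports a z-score (how many standard deviations the count of watermark-favored words sits above what chance predicts) and use the normal approximation. The chance that unwatermarked, human-written work is wrongly flagged then depends only on the threshold. The thresholds and the 10,000-essay cohort below are hypothetical.

```python
import math


def false_positive_rate(z_threshold: float) -> float:
    """P(Z > z_threshold) for a standard normal Z: chance that unwatermarked text scores above the threshold."""
    return 0.5 * math.erfc(z_threshold / math.sqrt(2.0))


def expected_false_flags(num_submissions: int, z_threshold: float) -> float:
    """Expected number of honest submissions flagged at this threshold."""
    return num_submissions * false_positive_rate(z_threshold)


for z in (2.0, 3.0, 4.0):
    print(f"threshold z > {z}: false-positive rate {false_positive_rate(z):.1e}, "
          f"expected false flags in 10,000 essays {expected_false_flags(10_000, z):.1f}")
```

Under these assumptions, a threshold of 2 would wrongly flag roughly 2% of honest essays (over 200 in 10,000), while a threshold of 4 brings that down to about 3 in 100,000, at the cost of missing AI text whose watermark signal is weak or has been edited away.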

Legal and Ethical Concerns

As AI becomes more integrated into our digital lives, the legal landscape must adapt to the evolving challenges it presents. For instance, if a piece of AI-generated content is used in legal proceedings or contracts, it becomes crucial to establish whether that content was produced by a human or an AI system. Invisible watermarks could be used as part of a broader regulatory framework to ensure that AI-generated content is clearly labeled and properly attributed.

Additionally, there may be ethical concerns around privacy and consent. Because the watermark is invisible, it raises questions about whether people using AI tools know that their text carries a hidden marker, and whether readers can tell that AI was involved without access to a detector. There could also be issues with how detection data is stored and accessed by platforms using the technology.

Potential for Misuse

While the watermark system offers many benefits, it is also open to abuse. Bad actors could try to weaken or remove the watermark, for example by paraphrasing, heavily editing, or translating AI-generated text, and then pass it off as human-written. It will therefore be essential for companies like Google to keep refining the technology so that it stays robust against such manipulation.
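
A rough model shows why paraphrasing is the main worry. Assume, hypothetically, a green-list style watermark in which unwatermarked text hits the favored words at a chance rate `gamma` and watermarked text at a higher rate. If an editor rewrites a fraction `p` of the words and each rewritten word is favored only by chance, the expected z-score shrinks in proportion to (1 - p). The rates and lengths below are illustrative, and the model ignores the fact that a substitution can also disturb the scoring of the word after it, so real degradation may be faster.

```python
import math


def expected_z(num_tokens: int, green_rate: float, gamma: float = 0.5) -> float:
    """Expected detection z-score for text whose tokens land on the green list at `green_rate`."""
    return (green_rate - gamma) * num_tokens / math.sqrt(num_tokens * gamma * (1 - gamma))


def green_rate_after_edit(original_rate: float, edited_fraction: float, gamma: float = 0.5) -> float:
    """Blend the original green rate with the chance rate for the share of tokens that were rewritten."""
    return (1 - edited_fraction) * original_rate + edited_fraction * gamma


for p in (0.0, 0.25, 0.5, 0.8):
    rate = green_rate_after_edit(0.7, p)
    print(f"rewrite {p:.0%} of 200 tokens: expected z = {expected_z(200, rate):.2f}")
```

With these assumed numbers, rewriting half the text halves the expected z-score, from about 5.7 to about 2.8, which would push it below a strict threshold such as 4.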

How Google Plans to Implement the Watermark

Google’s approach to embedding invisible watermarks in AI-generated text involves integrating this technology into their existing suite of tools and services. The watermarking system will be part of Google’s broader AI infrastructure, making it accessible to developers and content creators across different platforms.

For example, Google might integrate the watermarking feature into its AI content generation tools, such as Gemini (formerly Google Bard), or into its search products, allowing users to identify AI-generated text on websites, in news articles, or across social media platforms.

Developer Tools and Integration

For developers, Google may provide APIs or SDKs that integrate watermark detection into existing platforms. This would let websites, content creators, and tech companies adopt the watermarking system in their own AI tools, which could help make it a widely accepted practice across industries.
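
As an illustration of what such an integration point could look like, here is a hypothetical Python detector interface. The class `WatermarkDetector`, its `detect` method, the `DetectionResult` fields, whitespace tokenization, and the keyed green-list scoring are all assumptions for this sketch, not a documented Google API; a real service would use the generating model’s own tokenizer and scoring method.

```python
import hashlib
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionResult:
    scored_tokens: int     # tokens that had a preceding token to key on
    green_tokens: int      # how many of those fell on the keyed "green" list
    z_score: float         # standard deviations above the chance level
    is_watermarked: bool   # whether z_score exceeded the configured threshold


class WatermarkDetector:
    """Hypothetical keyed detector for a green-list style text watermark."""

    def __init__(self, key: bytes, gamma: float = 0.5, z_threshold: float = 4.0):
        if not 0.0 < gamma < 1.0:
            raise ValueError("gamma must be strictly between 0 and 1")
        self.key = key
        self.gamma = gamma
        self.z_threshold = z_threshold

    def is_green(self, prev_token: str, token: str) -> bool:
        digest = hashlib.sha256(self.key + prev_token.encode() + b"|" + token.encode()).digest()
        return digest[0] < 256 * self.gamma

    def detect(self, text: str) -> DetectionResult:
        tokens = text.split()  # stand-in for the generating model's tokenizer
        n = len(tokens) - 1
        if n < 1:
            return DetectionResult(0, 0, 0.0, False)
        green = sum(self.is_green(p, t) for p, t in zip(tokens, tokens[1:]))
        z = (green - self.gamma * n) / math.sqrt(n * self.gamma * (1 - self.gamma))
        return DetectionResult(n, green, z, z > self.z_threshold)


detector = WatermarkDetector(key=b"hypothetical-key")
print(detector.detect("this sentence was typed by a person and carries no watermark"))
```

Keeping the key on the detector side is a deliberate choice in this sketch: anyone holding it could also check, or try to forge, the pattern, so a provider might expose detection through a service rather than ship the key to clients.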

Google’s commitment to transparency is also reflected in its open approach to AI ethics. By offering such tools, they aim to empower developers and organizations to use AI responsibly and to combat the potential negative consequences of AI-generated content.

The Future of AI-Generated Content and Watermarking Technology

As AI continues to evolve, the role of invisible watermarks may expand and become even more integral to the digital ecosystem. In the future, it is likely that more sophisticated watermarking systems will be developed, potentially incorporating blockchain technology or other advanced methods for verifying the origin and integrity of AI-generated content.

The Role of AI Ethics in Content Generation

The growing presence of AI in content generation raises important ethical questions. As more organizations adopt AI systems for creating written content, questions around copyright, intellectual property, and authorship will become increasingly complex. Watermarking technology is one step in addressing these challenges, but it will likely require further advancements in AI ethics to fully tackle the complexities of AI-generated content.

The Emergence of New Standards

Google’s introduction of invisible watermarks could pave the way for the establishment of new standards in AI content generation. Over time, as more companies adopt similar technologies, it is possible that the use of invisible watermarks will become a standard practice in AI-driven industries. This could lead to a more transparent digital landscape, where AI-generated content is clearly labeled and easily identifiable.

Conclusion

Google’s introduction of invisible watermarks for AI-generated text is a significant move in the evolving landscape of digital content. The technology aims to address critical concerns about transparency, misinformation, and the ethical use of AI in content creation. With a subtle but powerful approach, Google is helping to ensure that AI-generated content can be identified and traced, and that its use can be held to account.

As AI technology continues to advance, the use of invisible watermarks is expected to grow and evolve, playing an essential role in the ongoing conversation about the future of AI and its impact on society. Through continuous innovation and ethical practices, Google is working to ensure that AI’s role in content generation remains responsible, transparent, and beneficial for all.
